There’s a bit of buzz lately about the rise of AI (artificial intelligence) in writing. AI writing tools have been increasingly used in website design and marketing. At this point, any student can access AI tools to generate writing. It’s as easy as copying and pasting questions from your assignment and hitting enter. The AI tools will generate a unique, well-written composition that meets the parameters of the question. If you enter a question, it will answer it. If you tell it to do so in 4 sentences, it will give you 4 sentences. If you add “give 3 pieces of evidence”, it will give you 3 pieces of evidence. If you ask the AI to explain the answer in words that a 5th grader would use, it will even do that.

The tool generates original text using an AI technology called GPT-3, so it will fool plagiarism checkers that rely on matching existing text. If you google sections of the text, you will not find results that match exactly.

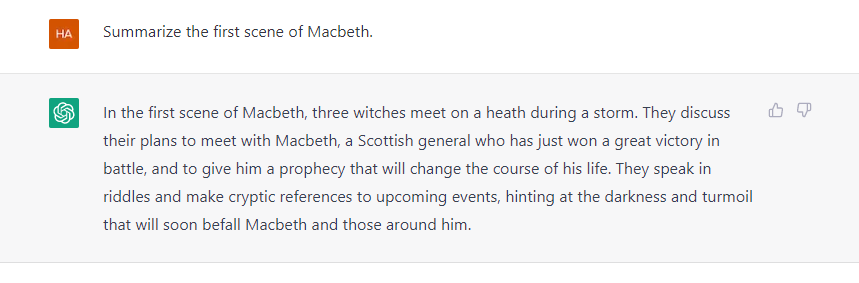

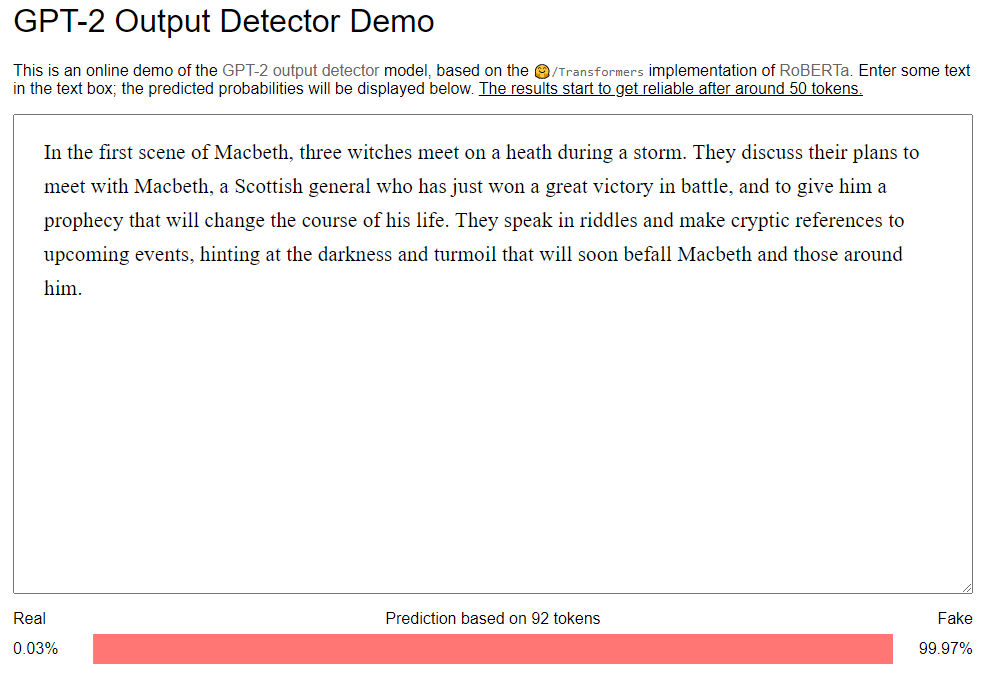

There are some tools available to counter this in cases where you suspect that a student’s writing is generated by GPT, including this GPT-2 Output Detector Demo that Troy Patterson introduced me to. We tested this out, adding text that we had generated with GPT-3. The detector indicated, accurately, that the writing was fake. Here’s how the passage above checks out:

We likewise tested some text that I had written for a previous blog post, and the results were exactly the opposite. It indicated that it was “98% Real”. We then tried out some text from an article from a local newspaper, and found similar results. There was, however, another article we tested which indicated that it was just over “60% Real”. The article was presumably written by a real person. But perhaps they used GPT to enhance it. Or perhaps they used GPT to write the bulk of the passage and supplemented it with their own writing. Either way, while this tool may give us some indicators of suspected AI use, it’s going to be really difficult to determine just how reliable it actually is.
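One way to keep the detector’s output in perspective is to read the percentage as a rough band rather than a verdict. Here is a minimal Python sketch of that idea. The function name `interpret_real_score` and the 90% and 10% cutoffs are assumptions made purely for illustration; they do not come from the detector or from any study of its accuracy.

```python
def interpret_real_score(real_probability: float) -> str:
    """Map a detector's "Real" probability (0.0 to 1.0) to a cautious label.

    The cutoffs below are illustrative assumptions, not values published
    by any detector.
    """
    if not 0.0 <= real_probability <= 1.0:
        raise ValueError("real_probability must be between 0.0 and 1.0")
    if real_probability >= 0.90:
        return "likely human-written"
    if real_probability <= 0.10:
        return "possibly AI-generated; talk with the student"
    # Everything in between is too ambiguous to act on.
    return "inconclusive"


# The scores mentioned in this post, plus a hypothetical low one.
for score in (0.98, 0.60, 0.02):
    print(f"{score:.0%} real -> {interpret_real_score(score)}")
```

Under these assumed cutoffs, the “98% Real” blog text lands in the first band and the “60% Real” newspaper article comes out as inconclusive, which matches how little we could actually conclude from it.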

So what’s a teacher to do?

How do we counter this? Some have suggested that we only accept handwritten samples from students. That could work, as long as the students write in front of us and not at home, in the hallway, or in another classroom where they could be using GPT to generate the text, then copy it off the screen.

There are questions, however, that AI tools will not answer. When I ask for a subjective answer, such as an opinion or belief, most AI tools that I’ve tried essentially decline to answer. So another approach is to frame our questions along the lines of “Explain your opinion on ______ and support it with 3 pieces of evidence that informed your opinion.” The problem of assessment thus ensues: how do we grade a student’s response to a subjective question objectively? The key is that there are, indeed, objective measures in a prompt such as the example I gave above: whether the student states an opinion, and whether they support it with 3 pieces of evidence.

Either way, one thing I know for sure: AI needs to begin to be a factor in our discussions of academic integrity.

What we definitely should not do

For one thing, teachers definitely should not jump to the conclusion that text was generated by AI when a student turns in a well-written piece of work, even if we run it through the detector. As I mentioned, the detector is not necessarily reliable. It will not, as they say, hold up in court. We also shouldn’t assume that students are not using AI in their work. Because if they aren’t now, they will be soon.

Can we banish it from our classrooms? Sure, we could try. But students will find ways around it if they really want to. I stress if they really want to, because if we support their learning and value the process of learning and writing just as much as the end product, then maybe they won’t want to use it in the first place. But even that doesn’t really sound all that realistic. We can, and probably should, have distinct times when technology is not allowed to be used in our classrooms. But a wholesale elimination of technology from the learning environment takes the countless other benefits of educational technology away from students who could be empowered by it, thereby limiting their opportunities. There are words for this.

Can we use it for good?

Troy Patterson pondered at the end of one of his recent blog posts on the subject, “So what does the future look like? Actually, that’s the wrong question. What does life look like today? Should we be taking advantage of these tools? Should we be teaching kids to utilize them for their benefit?”

Can we use this to help kids write? Maybe. I’m not sure how…yet. But one thing I’ve learned about technology is that the absolutely wrong approach is to deny it exists, to fight it, ban it, or whatever negative thing you want to do to it. It’s here to stay, and it will only progress. The best approach is to figure out how to work with it and to allow it to actually solve problems in our classrooms, even if we have to reframe what the problems are. Believe it or not, there were people who actually wanted to “ban Google” from classrooms. Meanwhile, there were others changing their questions to things like “When you Google Macbeth, what themes emerge from the results of the search? How do these compare to the themes we discussed in class?” Imagine a situation in which the prompt now becomes, “Generate a paragraph on scene 1 of Macbeth using AI. What key events, themes, or details are missing from this paragraph generated by the AI?” The point is not that “if you can’t beat ’em, join ’em”. The point is that we don’t actually have to fight everything that we don’t have immediate answers for in the first place. We need to constantly adapt, and technology is one of the driving forces in our adaptation whether we want to admit it or not.